Ruidi Chang

Department of Computer Science

Address: Duncan Hall, 6100 Main St, Houston, TX 77005

Email: rc151@rice.edu

About Me

I’m a second-year CS Ph.D. student at Rice University, advised by Prof. Hanjie Chen. I’m interested in interpretable machine learning, especially in understanding how language models process information and make decisions. Before Rice, I earned my master’s degree at Carnegie Mellon University.

Publications

Steering Information Utility in Key-Value Memory for Language Model Post-Training

Chunyuan Deng, Ruidi Chang, Hanjie Chen

Advances in Neural Information Processing Systems (NeurIPS), 2025.

Learning Distribution-wise Control in Representation Space for Language Models

Chunyuan Deng, Ruidi Chang, Hanjie Chen

International Conference on Machine Learning (ICML), 2025.

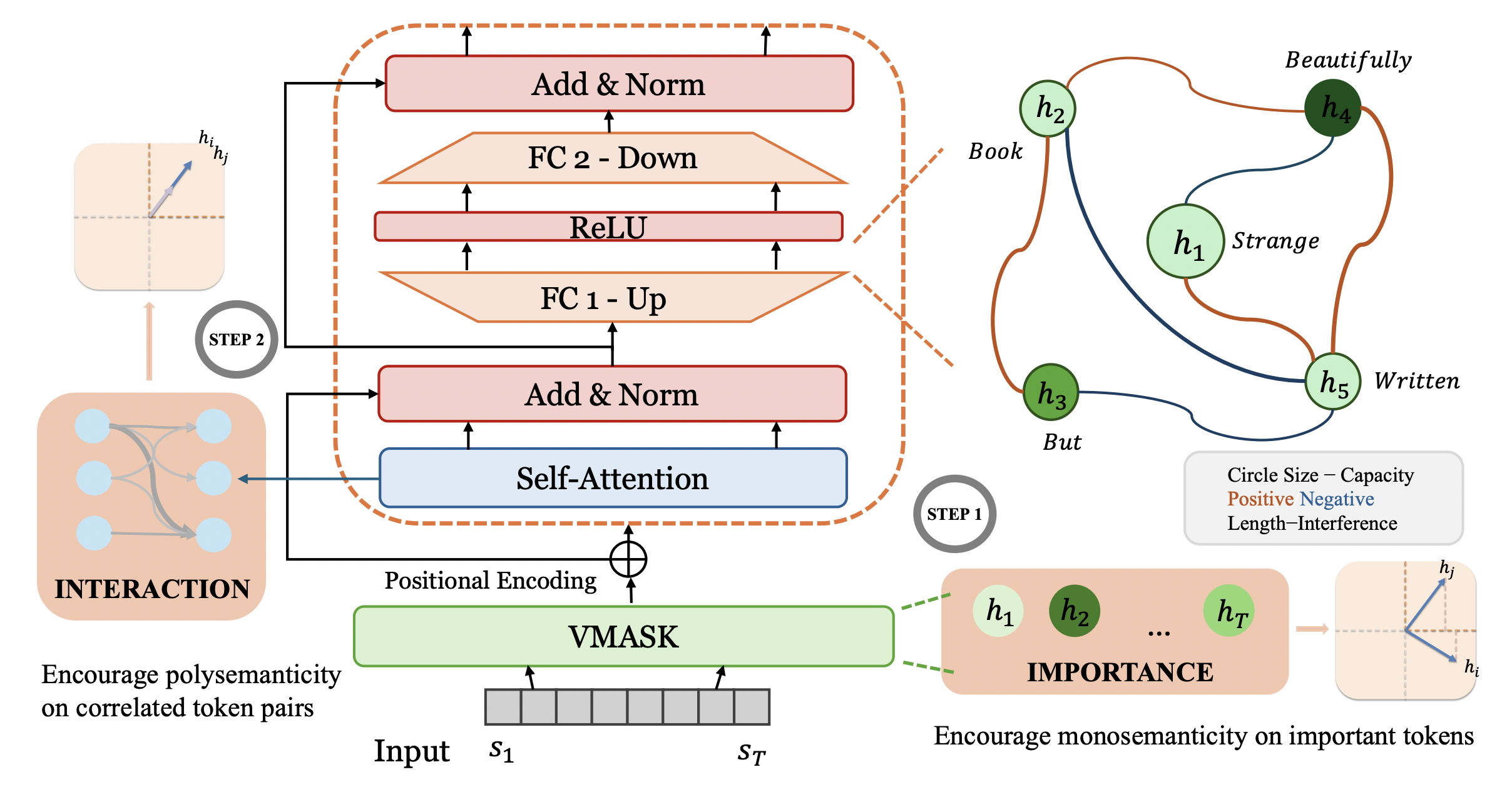

SAFR: Neuron Redistribution for Interpretability

Ruidi Chang, Chunyuan Deng, Hanjie Chen

North American Chapter of the Association for Computational Linguistics (NAACL) Findings, 2025.

🧠 Neurons often mix too many features (superposition), which makes models a black box.

🎯 SAFR strategically redistributes neurons:

- Monosemantic for important tokens

- Polysemantic where interactions matter

SAFR improves interpretability.

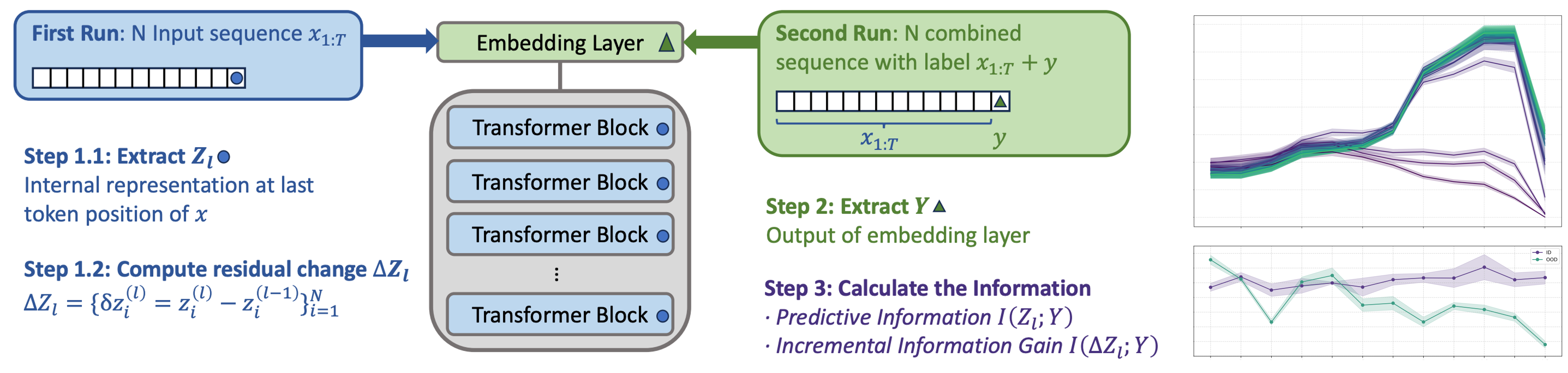

The Generalization Ridge: Information Flow in Natural Language Generation

Ruidi Chang, Chunyuan Deng, Hanjie Chen

arXiv preprint, 2025.

🔍 Models do not simply get better with depth; they show a ridge of generalization in intermediate layers.

✂️ InfoRidge is an information-theoretic lens for tracing how predictive information flows across depth.

- Predictive information peaks in upper-middle layers (the “ridge”), where models exhibit stronger generalization behavior, then drops in final layers.

- Residual scaling probes show that under distribution shift, models downweight deep layers and rely more on ridge layers.

InfoRidge identifies the generalization ridge: an intermediate-layer peak in predictive information.

Large Language Model Based Multi-Agents: A Survey of Progress and Challenges

Taicheng Guo, Xiuying Chen, Yaqi Wang, Ruidi Chang, Shichao Pei, Nitesh V. Chawla, Olaf Wiest, Xiangliang Zhang

International Joint Conference on Artificial Intelligence (IJCAI), 2024.

Language Models are Symbolic Learners in Arithmetic

Chunyuan Deng, Zhiqi Li, Roy Xie, Ruidi Chang, Hanjie Chen

In submission (Transactions on Machine Learning Research, TMLR).

Services

- Reviewer: ACL ARR February 2025, IEEE BigData 2025, EMNLP BlackboxNLP Workshop 2025, COLM XLLM-Reason-Plan Workshop 2025, COLING 2025

- Volunteer: EMNLP BlackboxNLP Workshop 2024

- Mentoring: Nursultan Asilbekov (SURF Program)